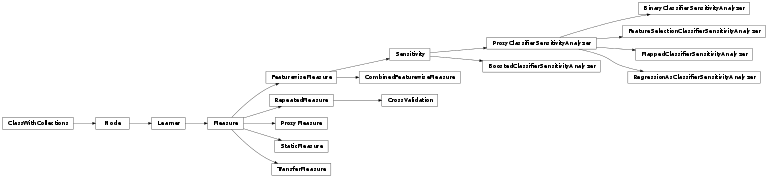

mvpa2.measures.base

Plumbing for measures: algorithms that quantify properties of datasets.

Besides the Measure base class, this module also provides the (abstract) FeaturewiseMeasure class. The difference between a general Measure and a FeaturewiseMeasure is that the latter returns a 1D map (one value per feature in the dataset). In contrast, there are no restrictions on the value returned by a Measure, except that it has to be in some iterable container.
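A minimal pure-Python sketch of this contract follows. It is not the mvpa2 implementation: samples are modeled as a plain list of rows (one row per sample, one column per feature), and SampleCount and FeatureMean are hypothetical example measures. The featurewise base class checks that exactly one value per feature is returned.

```python
class Measure:
    """Sketch of a general measure: may return any iterable container."""

    def __call__(self, samples):
        return self._call(samples)

    def _call(self, samples):
        raise NotImplementedError


class FeaturewiseMeasure(Measure):
    """Sketch of a featurewise measure: must return one value per feature."""

    def __call__(self, samples):
        result = self._call(samples)
        n_features = len(samples[0])
        if len(result) != n_features:
            raise ValueError("expected %d values, got %d" % (n_features, len(result)))
        return result


class SampleCount(Measure):
    # Hypothetical general measure: a single value describing the whole dataset.
    def _call(self, samples):
        return [len(samples)]


class FeatureMean(FeaturewiseMeasure):
    # Hypothetical featurewise measure: the mean of each feature (column).
    def _call(self, samples):
        return [sum(col) / len(col) for col in zip(*samples)]


data = [[1.0, 2.0, 3.0],
        [3.0, 4.0, 5.0]]
print(SampleCount()(data))  # [2]
print(FeatureMean()(data))  # [2.0, 3.0, 4.0]
```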

Functions

asobjarray(x)

Generates numpy.ndarray with dtype object from an iterable

auto_null_dist(dist)

Cheater for human beings – wraps dist if needed with some

enhanced_doc_string(item, \*args, \*\*kwargs)

Generate enhanced doc strings for various items.

group_kwargs(prefixes[, assign, passthrough])

Decorator function to join parts of kwargs together
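The idea of joining parts of kwargs together can be sketched as a decorator that collects every keyword argument whose name starts with a given prefix plus an underscore into one dict passed under the prefix name. The decorator name group_by_prefix and this exact behavior are assumptions for illustration; the real group_kwargs also takes assign and passthrough options that the sketch does not model.

```python
import functools


def group_by_prefix(prefixes):
    """Hypothetical decorator: gather kwargs named '<prefix>_<key>' into one dict."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for prefix in prefixes:
                marker = prefix + "_"
                # Start from an explicitly passed dict, if the caller gave one.
                grouped = dict(kwargs.pop(prefix, None) or {})
                # Iterate over a snapshot of the keys so popping is safe.
                for key in list(kwargs):
                    if key.startswith(marker):
                        grouped[key[len(marker):]] = kwargs.pop(key)
                kwargs[prefix] = grouped
            return func(*args, **kwargs)
        return wrapper
    return decorator


@group_by_prefix(["plot"])
def render(data, plot=None):
    # 'plot' arrives as one dict built from all plot_* keyword arguments.
    return data, plot


print(render([1, 2], plot_color="red", plot_width=2))
# ([1, 2], {'color': 'red', 'width': 2})
```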

hstack(datasets[, a, sa])

Stacks datasets horizontally (appending features).
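Horizontal stacking can be sketched in plain Python by modeling a dataset as a dict with samples (a list of rows) and sa (per-sample attributes). This dict is a hypothetical stand-in for the mvpa2 Dataset. Copying the sample attributes from the first input is an assumption of the sketch; the real function exposes a and sa arguments for attribute handling, which are not modeled here.

```python
def hstack_sketch(datasets):
    """Append features: row i of the result joins row i of every input."""
    n_samples = len(datasets[0]["samples"])
    if any(len(ds["samples"]) != n_samples for ds in datasets):
        raise ValueError("all datasets need the same number of samples")
    samples = []
    for i in range(n_samples):
        row = []
        for ds in datasets:
            row.extend(ds["samples"][i])
        samples.append(row)
    # Sample attributes describe rows, so this sketch copies them from the first input.
    return {"samples": samples, "sa": dict(datasets[0]["sa"])}


a = {"samples": [[1, 2], [3, 4]], "sa": {"targets": ["x", "y"]}}
b = {"samples": [[5], [6]], "sa": {"targets": ["x", "y"]}}
print(hstack_sketch([a, b])["samples"])  # [[1, 2, 5], [3, 4, 6]]
```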

mean_mismatch_error(predicted, target)

Computes the percentage of mismatches between some target and some predicted values.
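A minimal sketch of the computation: count the positions where prediction and target differ, then divide by the number of predictions. The function name is hypothetical. This sketch returns the mismatch rate as a fraction between 0 and 1 (multiply by 100 for a percentage); which scale the mvpa2 function uses should be checked against its source.

```python
def mean_mismatch_error_sketch(predicted, target):
    """Fraction of positions where predicted and target differ."""
    if len(predicted) != len(target):
        raise ValueError("predicted and target must have the same length")
    mismatches = sum(p != t for p, t in zip(predicted, target))
    return mismatches / len(predicted)


print(mean_mismatch_error_sketch(["a", "b", "b", "a"], ["a", "b", "a", "a"]))  # 0.25
```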

vstack(datasets[, a, fa])

Stacks datasets vertically (appending samples).
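Vertical stacking can be sketched the same way, again modeling a dataset as a hypothetical dict with samples and sa entries rather than the mvpa2 class. Samples are appended, and each sample attribute is concatenated in the same order so it stays aligned. The real function's a and fa arguments (dataset and feature attribute handling) are not modeled.

```python
def vstack_sketch(datasets):
    """Append samples: all inputs must have the same number of features."""
    n_features = len(datasets[0]["samples"][0])
    if any(len(row) != n_features for ds in datasets for row in ds["samples"]):
        raise ValueError("all datasets need the same number of features")
    samples = [list(row) for ds in datasets for row in ds["samples"]]
    # Concatenate each sample attribute so it stays aligned with the samples.
    sa = {key: [v for ds in datasets for v in ds["sa"][key]]
          for key in datasets[0]["sa"]}
    return {"samples": samples, "sa": sa}


a = {"samples": [[1, 2], [3, 4]], "sa": {"targets": ["x", "y"], "chunks": [0, 0]}}
b = {"samples": [[5, 6]], "sa": {"targets": ["x"], "chunks": [1]}}
stacked = vstack_sketch([a, b])
print(stacked["samples"])       # [[1, 2], [3, 4], [5, 6]]
print(stacked["sa"]["chunks"])  # [0, 0, 1]
```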

Classes

AttrDataset(samples[, sa, fa, a])

Generic storage class for datasets with multiple attributes.

AttributeMap([map, mapnumeric, ...])

Map to translate literal values to numeric ones (and back).
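The translation between literal and numeric values can be sketched as a pair of dictionaries kept in sync. LiteralMap is a hypothetical name, and assigning codes in order of first appearance is an assumption of this sketch; mvpa2's AttributeMap may order the codes differently, and its options (such as mapnumeric) are not modeled.

```python
class LiteralMap:
    """Hypothetical two-way map between literal labels and integer codes."""

    def __init__(self):
        self._to_num = {}
        self._to_lit = {}

    def to_numeric(self, values):
        # Assign the next free integer to each previously unseen literal.
        for v in values:
            if v not in self._to_num:
                code = len(self._to_num)
                self._to_num[v] = code
                self._to_lit[code] = v
        return [self._to_num[v] for v in values]

    def to_literal(self, codes):
        # Reverse lookup; raises KeyError for codes that were never assigned.
        return [self._to_lit[c] for c in codes]


amap = LiteralMap()
codes = amap.to_numeric(["face", "house", "face", "cat"])
print(codes)                   # [0, 1, 0, 2]
print(amap.to_literal(codes))  # ['face', 'house', 'face', 'cat']
```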

BinaryClassifierSensitivityAnalyzer(\*args_, ...)

Set sensitivity analyzer output to have proper labels

BinaryFxNode(fx, space, \*\*kwargs)

Extract a dataset attribute and call a function with it and the samples.
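A sketch of the idea: the node looks up one sample attribute (named by space) and passes it, together with the samples, to a function fx. Here the dataset is a hypothetical dict whose samples are a flat list of predictions, and the argument order fx(samples, attribute) is an assumption. Pairing the node with a mismatch-rate function turns predictions into an error score.

```python
def binary_fx_node_sketch(fx, space):
    """Return a callable that applies fx to the samples and one sample attribute."""
    def node(dataset):
        return fx(dataset["samples"], dataset["sa"][space])
    return node


def mismatch_rate(predicted, target):
    # Fraction of positions where prediction and target differ.
    return sum(p != t for p, t in zip(predicted, target)) / len(predicted)


predictions = {"samples": ["a", "b", "a", "a"],
               "sa": {"targets": ["a", "b", "b", "a"]}}
error_node = binary_fx_node_sketch(mismatch_rate, "targets")
print(error_node(predictions))  # 0.25
```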

BoostedClassifierSensitivityAnalyzer(\*args_, ...)

Set sensitivity analyzers to be merged into a single output

CombinedFeaturewiseMeasure([analyzers, sa_attr])

Set sensitivity analyzers to be merged into a single output

ConditionalAttribute([enabled])

Simple container intended to conditionally store the value
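The conditional storage idea can be sketched with a property that silently drops assignments while the attribute is disabled. ConditionalValue is a hypothetical name, and raising AttributeError when no value is stored is a choice of this sketch, not necessarily what mvpa2 does.

```python
class ConditionalValue:
    """Hypothetical container that stores a value only when enabled."""

    def __init__(self, enabled=False):
        self.enabled = enabled
        self._value = None
        self._is_set = False

    @property
    def value(self):
        if not self._is_set:
            raise AttributeError("no value stored (disabled or never set)")
        return self._value

    @value.setter
    def value(self, v):
        # Assignments are ignored while the attribute is disabled.
        if self.enabled:
            self._value = v
            self._is_set = True


on = ConditionalValue(enabled=True)
on.value = 3
off = ConditionalValue()
off.value = 3
print(on.value)                # 3
print(hasattr(off, "value"))   # False
```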

CrossValidation(learner[, generator, ...])

Cross-validate a learner’s transfer on datasets.
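The cross-validation loop can be sketched without mvpa2: each unique chunks value is held out once, a learner is trained on the remaining samples, and the mismatch rate on the held-out samples is recorded. The leave-one-chunk-out scheme and the nearest-class-mean learner are swapped in for illustration; they do not reproduce the CrossValidation API, which takes a learner and a generator object.

```python
def train_nearest_mean(samples, targets):
    """Toy learner: remember the mean sample of each class."""
    means = {}
    for label in set(targets):
        rows = [s for s, t in zip(samples, targets) if t == label]
        means[label] = [sum(col) / len(col) for col in zip(*rows)]
    return means


def predict_nearest_mean(means, samples):
    # Assign each sample to the class whose mean is closest (squared distance).
    def dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return [min(means, key=lambda label: dist(means[label], s)) for s in samples]


def cross_validate(samples, targets, chunks):
    """Leave-one-chunk-out: return one mismatch rate per held-out chunk."""
    errors = []
    for held_out in sorted(set(chunks)):
        train = [i for i, c in enumerate(chunks) if c != held_out]
        test = [i for i, c in enumerate(chunks) if c == held_out]
        model = train_nearest_mean([samples[i] for i in train],
                                   [targets[i] for i in train])
        predicted = predict_nearest_mean(model, [samples[i] for i in test])
        mismatches = sum(p != targets[i] for p, i in zip(predicted, test))
        errors.append(mismatches / len(test))
    return errors


samples = [[0.0], [1.0], [0.2], [1.2], [0.8], [0.9]]
targets = ["a", "b", "a", "b", "a", "b"]
chunks = [0, 0, 1, 1, 2, 2]
print(cross_validate(samples, targets, chunks))  # [0.0, 0.0, 0.5]
```

In the last fold the "a" sample at 0.8 lies closer to the "b" mean learned from the other chunks, so half of that fold is misclassified.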

Dataset(samples[, sa, fa, a])

Generic storage class for datasets with multiple attributes.

FeatureSelectionClassifierSensitivityAnalyzer(...)

FeaturewiseMeasure([null_dist])

A per-feature measure computed from a Dataset (base class).

Learner([auto_train, force_train])

Common trainable processing object.
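The interplay of the auto_train and force_train flags might be sketched as follows. The semantics assumed here (auto_train: train on the first call if still untrained; force_train: retrain on every call) are an interpretation of the parameter names, and the "learner" is a toy that learns the mean of its training data and subtracts it.

```python
class LearnerSketch:
    """Toy learner: 'learns' the mean of its training data and subtracts it."""

    def __init__(self, auto_train=False, force_train=False):
        self.auto_train = auto_train
        self.force_train = force_train
        self.is_trained = False
        self.train_calls = 0
        self.offset = None

    def train(self, data):
        self.train_calls += 1
        self.offset = sum(data) / len(data)
        self.is_trained = True

    def __call__(self, data):
        # force_train retrains on every call; auto_train only trains once.
        if self.force_train or (self.auto_train and not self.is_trained):
            self.train(data)
        if not self.is_trained:
            raise RuntimeError("learner is not trained")
        return [x - self.offset for x in data]


auto = LearnerSketch(auto_train=True)
print(auto([1.0, 2.0, 3.0]))  # [-1.0, 0.0, 1.0]
print(auto([4.0]))            # [2.0] (keeps the first training result)

forced = LearnerSketch(force_train=True)
forced([1.0, 3.0])
print(forced([4.0]))          # [0.0] (retrained on this call)
```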

MappedClassifierSensitivityAnalyzer(\*args_, ...)

Set sensitivity analyzer output to be reverse mapped using mapper of the

Measure([null_dist])

A measure computed from a Dataset

Node([space, pass_attr, postproc])

Common processing object.

ProxyClassifierSensitivityAnalyzer(\*args_, ...)

Set sensitivity analyzer output just to pass through

ProxyMeasure(measure[, skip_train])

Wrapper to allow for alternative post-processing of a shared measure.

RegressionAsClassifierSensitivityAnalyzer(...)

Set sensitivity analyzer output to have proper labels

RepeatedMeasure(node[, generator, callback, ...])

Repeatedly run a measure on generated datasets.
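A minimal sketch of the repetition: a generator produces dataset variants, the measure is run on each, and the results are collected in order. The leave-one-sample-out generator and the callback signature callback(index, dataset, result) are hypothetical choices for illustration, not the mvpa2 interface.

```python
def repeated_measure(measure, generator, dataset, callback=None):
    """Run measure on every dataset produced by generator; collect results."""
    results = []
    for i, ds in enumerate(generator(dataset)):
        result = measure(ds)
        if callback is not None:
            callback(i, ds, result)  # hypothetical callback signature
        results.append(result)
    return results


def leave_one_out(values):
    # Yield the input with one element removed, for each position.
    for i in range(len(values)):
        yield values[:i] + values[i + 1:]


def mean(values):
    return sum(values) / len(values)


log = []
res = repeated_measure(mean, leave_one_out, [1.0, 2.0, 3.0],
                       callback=lambda i, ds, r: log.append((i, r)))
print(res)  # [2.5, 2.0, 1.5]
```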

Sensitivity(clf[, force_train])

Sensitivities of features for a given Classifier.

Splitter(attr[, attr_values, count, ...])

Generator node for dataset splitting.
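Splitting on a sample attribute can be sketched as a generator that yields one subset per attribute value. The function is hypothetical: when attr_values is not given it uses the unique values in order of first appearance, and it ignores the count argument and the other options of the real Splitter.

```python
def split_by_attr(samples, sa, attr, attr_values=None):
    """Yield (samples, sa) subsets, one per value of sa[attr]."""
    values = sa[attr]
    if attr_values is None:
        # Unique values, in order of first appearance.
        attr_values = list(dict.fromkeys(values))
    for v in attr_values:
        idx = [i for i, x in enumerate(values) if x == v]
        subset_sa = {key: [col[i] for i in idx] for key, col in sa.items()}
        yield [samples[i] for i in idx], subset_sa


samples = [[1], [2], [3], [4]]
sa = {"partitions": [1, 2, 1, 2], "targets": ["a", "b", "c", "d"]}
for part_samples, part_sa in split_by_attr(samples, sa, "partitions"):
    print(part_samples, part_sa["targets"])
# [[1], [3]] ['a', 'c']
# [[2], [4]] ['b', 'd']
```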

StaticMeasure([measure, bias])

A static (assigned) sensitivity measure.

TransferMeasure(measure, splitter, \*\*kwargs)

Train and run a measure on two different parts of a dataset.
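The transfer pattern can be sketched as: split the dataset, train on the first generated part, then apply the trained result to the second part. All names are hypothetical, the split is on a partition label stored with each value, and the "measure" is a toy that learns the training mean and subtracts it from the test values.

```python
def transfer_measure(train_fx, apply_fx, splitter, dataset):
    """Train on the first generated part and apply the result to the second."""
    parts = splitter(dataset)
    train_part = next(parts)
    test_part = next(parts)
    model = train_fx(train_part)
    return apply_fx(model, test_part)


def split_on_partitions(dataset):
    # dataset: list of (value, partition) pairs; partition 1 first, then 2.
    for p in (1, 2):
        yield [value for value, part in dataset if part == p]


def learn_mean(values):
    return sum(values) / len(values)


def center(mean, values):
    return [v - mean for v in values]


data = [(1.0, 1), (5.0, 2), (3.0, 1), (6.0, 2)]
print(transfer_measure(learn_mean, center, split_on_partitions, data))  # [3.0, 4.0]
```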