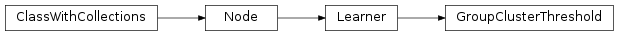

mvpa2.algorithms.group_clusterthr.GroupClusterThreshold

class mvpa2.algorithms.group_clusterthr.GroupClusterThreshold(**kwargs)

Statistical evaluation of group-level average accuracy maps.

This algorithm can be used to perform cluster-thresholding of searchlight-based group analyses. It implements a two-stage procedure: it takes the results of within-subject permutation analyses, estimates a per-feature cluster-forming threshold (via bootstrap), and then uses the thresholded bootstrap samples to estimate the distribution of cluster sizes in group-average accuracy maps under the NULL hypothesis, as described in [R3].
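The first stage (a per-feature cluster-forming threshold taken from the bootstrap distribution) can be sketched in plain Python. This is only an illustration, not the class's code: the function name `featurewise_thresholds`, the list-of-lists layout of `bootstrap_maps`, and the exact quantile convention (how ties and rounding are handled) are assumptions.

```python
def featurewise_thresholds(bootstrap_maps, feature_thresh_prob):
    """Return one cluster-forming threshold per feature.

    bootstrap_maps is a list of bootstrap average maps, each a list with one
    accuracy per feature (hypothetical layout). For every feature, the
    threshold is chosen so that at most a fraction `feature_thresh_prob` of
    the bootstrap values is strictly larger than it.
    """
    n_bootstrap = len(bootstrap_maps)
    n_features = len(bootstrap_maps[0])
    # Number of bootstrap values allowed to exceed the threshold.
    n_above = int(n_bootstrap * feature_thresh_prob)
    # Index into the sorted null values; clamp so it never wraps around.
    idx = max(0, n_bootstrap - n_above - 1)
    thresholds = []
    for f in range(n_features):
        null_values = sorted(m[f] for m in bootstrap_maps)
        thresholds.append(null_values[idx])
    return thresholds


# Four bootstrap maps with two features each.
maps = [[0.1, 0.9], [0.2, 0.8], [0.3, 0.7], [0.4, 0.6]]
thresholds = featurewise_thresholds(maps, 0.5)
```

Because the NULL distribution is estimated separately for each feature, the result is a vector of thresholds rather than a single value.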

Note: this class implements a modified version of that algorithm. The present implementation differs from the description in that paper in at least four respects:

- Cluster p-values refer to the probability of observing a particular cluster size or a larger one (original paper: probability to observe a larger cluster only). Consequently, probabilities reported by this implementation will have a tendency to be higher in comparison.

- Clusters found in the original (unpermuted) accuracy map are always included in the NULL distribution estimate of cluster sizes. This provides an explicit lower bound for probabilities, as there will always be at least one observed cluster for every cluster size found in the original accuracy map. Consequently, it is impossible to get a probability of zero for clusters of any size (see [R4] for more information).

- Bootstrap accuracy maps that contain no clusters are counted in a dedicated size-zero bin in the NULL distribution of cluster sizes. This change yields reliable cluster probabilities even for very low feature-wise threshold probabilities, where some portion of the bootstrap accuracy maps does not contain any clusters.

- The method for FWE-correction used by the original authors is not provided. Instead, a range of alternatives implemented by the statsmodels package are available.
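The first three differences listed above can be made concrete with a small sketch. It is not the class's implementation: the helper names `null_cluster_size_histogram` and `cluster_probability`, and the representation of each bootstrap map as a plain list of cluster sizes, are assumptions made for illustration.

```python
from collections import Counter


def null_cluster_size_histogram(bootstrap_cluster_sizes, observed_cluster_sizes):
    """Build a histogram of cluster sizes under the NULL hypothesis.

    bootstrap_cluster_sizes holds one list of cluster sizes per bootstrap map
    (hypothetical layout); an empty list means the map had no clusters.
    """
    hist = Counter()
    for sizes in bootstrap_cluster_sizes:
        if sizes:
            hist.update(sizes)
        else:
            hist[0] += 1  # map without clusters goes into the size-zero bin
    # Clusters from the unpermuted map are always part of the estimate.
    hist.update(observed_cluster_sizes)
    return hist


def cluster_probability(size, hist):
    """Probability of observing a cluster of this size or larger."""
    total = sum(hist.values())
    at_least = sum(count for s, count in hist.items() if s >= size)
    return float(at_least) / total


hist = null_cluster_size_histogram([[3, 1], [], [2], []], observed_cluster_sizes=[5])
p_observed = cluster_probability(5, hist)
```

Since the observed cluster is itself counted, `p_observed` cannot be zero, and the `>=` comparison is what makes these probabilities tend to be higher than under a strictly-larger rule.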

Moreover, this implementation keeps memory demands low and allows large numbers of bootstrap samples to be computed without a significant increase in memory demand, at the cost of additional CPU time.
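To get a feel for the memory trade-off, the following back-of-the-envelope sketch estimates the size of the matrix used for the feature-wise NULL distributions and how the `n_blocks` parameter documented below would reduce it. The helper name, the assumption of 8 bytes per value, and the assumption that segments split the features evenly are illustrative only and are not taken from the implementation.

```python
def peak_null_matrix_bytes(n_bootstrap, n_features, n_blocks=1, bytes_per_value=8):
    """Rough peak size of one (n_bootstrap x features-per-segment) matrix.

    Assumes (hypothetically) that features are split evenly into n_blocks
    segments and only one segment's matrix is held in memory at a time.
    """
    features_per_block = -(-n_features // n_blocks)  # ceiling division
    return n_bootstrap * features_per_block * bytes_per_value


# Hypothetical searchlight with 50,000 features and the default n_bootstrap.
single_block = peak_null_matrix_bytes(100000, 50000)
ten_blocks = peak_null_matrix_bytes(100000, 50000, n_blocks=10)
```

Under these assumptions, ten segments cut the peak allocation by roughly a factor of ten.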

Instances of this class must be trained before they can be used to threshold accuracy maps. The training dataset must match the following criteria:

- For every subject in the group, it must contain multiple accuracy maps that are the result of a within-subject classification analysis based on permuted class labels. Each map must correspond to one fixed permutation for all features in the map, as described in [R3]. The original authors recommend 100 accuracy maps per subject for a typical searchlight analysis.

- It must contain a sample attribute indicating which sample is associated with which subject, because bootstrapping average accuracy maps is implemented by randomly drawing one map from each subject. The name of the attribute can be configured via the `chunk_attr` parameter.
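The bootstrap step that motivates the subject attribute can be sketched as follows. This is a minimal illustration in plain Python, not the class's code: `bootstrap_average_map` and its arguments are hypothetical names, and maps are represented as simple lists of accuracies.

```python
import random


def bootstrap_average_map(maps, subject_ids, rng):
    """Draw one permuted accuracy map per subject and average feature-wise.

    maps and subject_ids are parallel lists: subject_ids[i] says which
    subject produced maps[i] (the role played by the chunk attribute).
    """
    by_subject = {}
    for m, sid in zip(maps, subject_ids):
        by_subject.setdefault(sid, []).append(m)
    # One randomly chosen map from each subject.
    drawn = [rng.choice(by_subject[sid]) for sid in sorted(by_subject)]
    n_features = len(drawn[0])
    return [sum(m[f] for m in drawn) / float(len(drawn)) for f in range(n_features)]


maps = [[0.0, 1.0], [0.0, 1.0], [1.0, 0.0], [1.0, 0.0]]
subject_ids = ["s1", "s1", "s2", "s2"]
avg = bootstrap_average_map(maps, subject_ids, random.Random(0))
```

Repeating this draw many times yields the set of bootstrap average maps from which the NULL distributions are estimated.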

After training, an instance can be called with a dataset to perform thresholding and statistical evaluation. Unless a single-sample dataset is passed, all samples in the input dataset will be averaged prior to thresholding.

Returns: Dataset

This is a shallow copy of the input dataset (after a potential averaging), hence contains the same data and attributes. In addition it includes the following attributes:

- `fa.featurewise_thresh`: Vector with feature-wise cluster-forming thresholds.

- `fa.clusters_featurewise_thresh`: Vector with labels for clusters after thresholding the input data with the desired feature-wise probability. Each unique non-zero element corresponds to an individual super-threshold cluster. Cluster values are sorted by cluster size (number of features). The largest cluster is always labeled with `1`.

- `fa.clusters_fwe_thresh`: Vector with labels for super-threshold clusters after correction for multiple comparisons. The attribute is derived from `fa.clusters_featurewise_thresh` by removing all clusters that do not pass the threshold when controlling for the family-wise error rate.

- `a.clusterstats`: Record array with information on all detected clusters. The array is sorted according to cluster size, starting with the largest cluster in terms of number of features. The array contains the fields `size` (number of features comprising the cluster), `mean`, `median`, `min`, `max`, `std` (respective descriptive statistics for all clusters), and `prob_raw` (probability of observing a cluster of this size or larger under the NULL hypothesis). If correction for multiple comparisons is enabled, an additional field `prob_corrected` (probability after correction) is added.

- `a.clusterlocations`: Record array with information on the location of all detected clusters. The array is sorted according to cluster size (same order as `a.clusterstats`). The array contains the fields `max` (feature coordinate of the maximum score within the cluster) and `center_of_mass` (coordinate of the center of mass, weighted by the feature values within the cluster).
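The labeling convention used by the cluster attributes (zero for sub-threshold features, `1` for the largest cluster, then increasing labels for smaller clusters) can be illustrated with a deliberately simplified one-dimensional sketch. The real class works on the spatial layout of the features; the function `label_clusters_1d`, the use of contiguous runs as "clusters", and the strict `>` comparison are assumptions for illustration.

```python
def label_clusters_1d(values, thresholds):
    """Label contiguous runs of super-threshold features by descending size."""
    runs = []  # (start, stop) index pairs, stop exclusive
    start = None
    for i, (v, t) in enumerate(zip(values, thresholds)):
        if v > t:
            if start is None:
                start = i  # a new run begins here
        elif start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(values)))  # run reaching the last feature
    # Largest run first, so it receives label 1.
    runs.sort(key=lambda r: r[1] - r[0], reverse=True)
    labels = [0] * len(values)
    for label, (a, b) in enumerate(runs, start=1):
        for i in range(a, b):
            labels[i] = label
    return labels


values = [0.9, 0.1, 0.9, 0.9, 0.9, 0.1, 0.9, 0.9]
labels = label_clusters_1d(values, [0.5] * len(values))
```

Here the three-feature run is labeled `1`, the two-feature run `2`, and the single feature `3`, mirroring the size-sorted order of the record arrays.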

Notes

Available conditional attributes:

- `calling_time+`: Time (in seconds) it took to call the node
- `raw_results`: Computed results before invoking postproc. Stored only if postproc is not None.
- `trained_dataset`: The dataset it has been trained on
- `trained_nsamples+`: Number of samples it has been trained on
- `trained_targets+`: Set of unique targets (or any other space) it has been trained on (if present in the dataset trained on)
- `training_time+`: Time (in seconds) it took to train the learner

(Conditional attributes enabled by default are suffixed with `+`.)

References

[R3] Johannes Stelzer, Yi Chen and Robert Turner (2013). Statistical inference and multiple testing correction in classification-based multi-voxel pattern analysis (MVPA): Random permutations and cluster size control. NeuroImage, 65, 69–82.

[R4] Smyth, G. K., & Phipson, B. (2010). Permutation P-values Should Never Be Zero: Calculating Exact P-values When Permutations Are Randomly Drawn. Statistical Applications in Genetics and Molecular Biology, 9, 1–12.

Attributes

- `auto_train`: Whether the Learner performs automatic training when called untrained.
- `descr`: Description of the object, if any.
- `force_train`: Whether the Learner enforces training upon every call.
- `is_trained`: Whether the Learner is currently trained.
- `pass_attr`: Which attributes of the dataset or self.ca to pass into the result dataset upon call.
- `postproc`: Node to perform post-processing of results.
- `space`: Processing space name of this node.

Methods

- `__call__(ds)`
- `generate(ds)`: Yield processing results.
- `get_postproc()`: Returns the post-processing node or None.
- `get_space()`: Query the processing space name of this node.
- `reset()`
- `set_postproc(node)`: Assigns a post-processing node.
- `set_space(name)`: Set the processing space name of this node.
- `train(ds)`: The default implementation calls `_pretrain()`, `_train()`, and finally `_posttrain()`.
- `untrain()`: Reverts changes in the state of this node caused by previous training.

Initialize instance of GroupClusterThreshold

Parameters:

n_bootstrap : int, optional

Number of bootstrap samples to be generated from the training dataset. For each sample, an average map will be computed from a set of randomly drawn samples (one from each chunk). Bootstrap samples will be used to estimate a featurewise NULL distribution of accuracy values for initial thresholding, and to estimate the NULL distribution of cluster sizes under the NULL hypothesis. A larger number of bootstrap samples reduces the lower bound of probabilities, which may be beneficial for multiple comparison correction. Constraints: value must be convertible to type ‘int’, and value must be in range [1, inf]. [Default: 100000]

feature_thresh_prob : float, optional

Feature-wise probability threshold. The value corresponding to this probability in the NULL distribution of accuracies will be used as the threshold for cluster forming. Given that the NULL distribution is estimated per feature, the actual threshold value will vary across features, yielding a threshold vector. The number of bootstrap samples needs to be adequate for the desired probability; a `ValueError` is raised otherwise. Constraints: value must be convertible to type ‘float’, and value must be in range [0.0, 1.0]. [Default: 0.001]

chunk_attr

Name of the attribute indicating the individual chunks from which a single sample each is drawn for averaging into a bootstrap sample. [Default: ‘chunks’]

fwe_rate : float, optional

Family-wise error rate for multiple comparison correction of cluster size probabilities. Constraints: value must be convertible to type ‘float’, and value must be in range [0.0, 1.0]. [Default: 0.05]

multicomp_correction : {bonferroni, sidak, holm-sidak, holm, simes-hochberg, hommel, fdr_bh, fdr_by, None}, optional

Strategy for multiple comparison correction of cluster probabilities. All methods supported by statsmodels’ `multitest` are available. In addition, `None` can be specified to disable correction. Constraints: value must be one of (‘bonferroni’, ‘sidak’, ‘holm-sidak’, ‘holm’, ‘simes-hochberg’, ‘hommel’, ‘fdr_bh’, ‘fdr_by’, None). [Default: ‘fdr_bh’]

n_blocks : int, optional

Number of segments used to compute the feature-wise NULL distributions. This parameter determines the peak memory demand. In case of a single segment, a matrix of size (n_bootstrap x nfeatures) will be allocated. Increasing the number of segments reduces the peak memory demand by roughly that factor. Constraints: value must be convertible to type ‘int’, and value must be in range [1, inf]. [Default: 1]

n_proc : int, optional

Number of parallel processes to use for computation. Requires the `joblib` external module. Constraints: value must be convertible to type ‘int’, and value must be in range [1, inf]. [Default: 1]

enable_ca : None or list of str

Names of the conditional attributes which should be enabled in addition to the default ones

disable_ca : None or list of str

Names of the conditional attributes which should be disabled

auto_train : bool

Flag whether the learner will automatically train itself on the input dataset when called untrained.

force_train : bool

Flag whether the learner will enforce training on the input dataset upon every call.

space : str, optional

Name of the ‘processing space’. The actual meaning of this argument heavily depends on the sub-class implementation. In general, this is a trigger that tells the node to compute and store information about the input data that is “interesting” in the context of the corresponding processing in the output dataset.

pass_attr : str, list of str|tuple, optional

Additional attributes to pass on to an output dataset. Attributes can be taken from all three attribute collections of an input dataset (sa, fa, a; see `Dataset.get_attr()`), or from the collection of conditional attributes (ca) of a node instance. Corresponding collection name prefixes should be used to identify attributes, e.g. ‘ca.null_prob’ for the conditional attribute ‘null_prob’, or ‘fa.stats’ for the feature attribute stats.

In addition to a plain attribute identifier, it is possible to use a tuple to trigger more complex operations. The first tuple element is the attribute identifier, as described before. The second element is the name of the target attribute collection (sa, fa, or a). The third element is the axis number of a multidimensional array that shall be swapped with the current first axis. The fourth element is a new name that shall be used for an attribute in the output dataset. Example: (‘ca.null_prob’, ‘fa’, 1, ‘pvalues’) will take the conditional attribute ‘null_prob’ and store it as a feature attribute ‘pvalues’, while swapping the first and second axes. Simplified instructions can be given by leaving out consecutive tuple elements starting from the end.

postproc : Node instance, optional

Node to perform post-processing of results. This node is applied in `__call__()` to perform a final processing step on the result dataset before it is returned. If None, nothing is done.

descr : str

Description of the instance
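As an illustration of what the default `multicomp_correction` value ‘fdr_bh’ does to a set of raw cluster probabilities, here is a hand-written Benjamini-Hochberg adjustment in plain Python. The class itself relies on the statsmodels package for this step; the function `fdr_bh_adjust` below is a hypothetical stand-in written only to show the arithmetic, and comparing the adjusted values against `fwe_rate` is an assumption about how the rate is applied.

```python
def fdr_bh_adjust(pvalues):
    """Return Benjamini-Hochberg adjusted p-values in the original order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices by ascending p
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down so adjusted values stay monotone.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, pvalues[i] * m / float(rank))
        adjusted[i] = running_min
    return adjusted


raw = [0.01, 0.04, 0.03, 0.005]  # hypothetical prob_raw values
corrected = fdr_bh_adjust(raw)
fwe_rate = 0.05
surviving = [p <= fwe_rate for p in corrected]
```

With these made-up inputs every cluster survives at a rate of 0.05; a larger `n_bootstrap` lowers the smallest attainable raw probability, which gives the correction more room to work with.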
